Publication Overview

This paper, presented at the IEEE 55th International Midwest Symposium on Circuits and Systems (MWSCAS) in 2012, introduces a novel approach to one of the most persistent challenges in neural network learning: catastrophic interference (also known as catastrophic forgetting).

The Problem: Catastrophic Interference

Catastrophic interference refers to the drastic loss of previously learned information when a neural network is trained on new or different information. Unlike gradual forgetting that occurs when a network reaches its learning capacity, catastrophic interference causes rapid information loss even when the network has plenty of remaining capacity. This phenomenon is particularly problematic in scenarios involving:

- Non-stationary input streams

- Continuous learning environments

- Real-world applications where data distributions shift over time

The problem has been studied for over two decades across machine learning, cognitive science, and psychology, yet it has remained a significant barrier to deploying neural networks in dynamic environments.

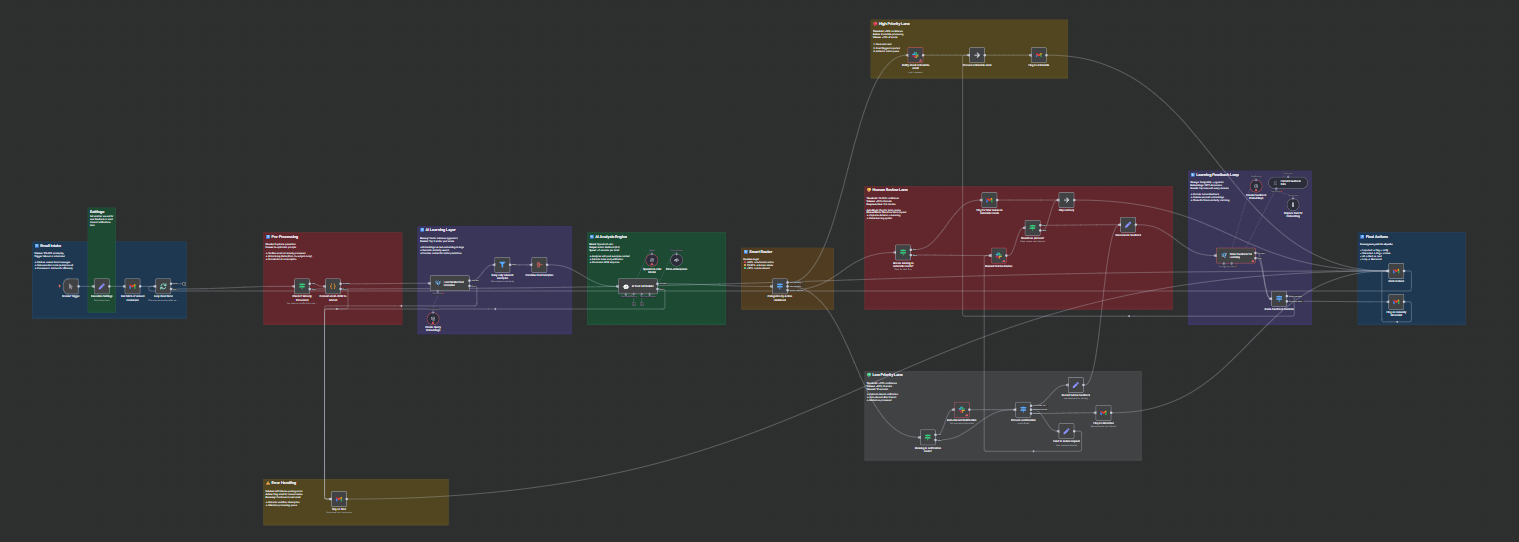

Our Solution: The Fixed Expansion Layer Network

We propose augmenting traditional multi-layer perceptron (MLP) networks with a Fixed Expansion Layer (FEL): a dedicated sparse layer of binary neurons that addresses the representational overlap problem underlying catastrophic forgetting.

Key innovations include:

- Fixed Weight Initialization: The FEL weights are set at network creation and remain unchanged during training. Each FEL neuron receives input from only a portion of hidden layer neurons, creating sparse signal expansion.

- Neuron Triggering Mechanism: During training, only a small portion of FEL neurons are activated based on their agreement or disagreement with the hidden layer signal. This selective activation protects hidden layer weights from portions of the backpropagation error signal.

- Binary Activations: Triggered neurons receive constant activation values rather than their computed values, effectively dividing the learning process into two complementary parts.

- Low Computational Overhead: Unlike other approaches such as pseudorehearsal or dual-weight methods, the FEL adds minimal computational and storage requirements.
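
The mechanisms listed above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names, the random +1/-1 weights, the use of the weighted sum as the "agreement" score, and the +1/-1/0 output constants are all hypothetical choices made for the example.

```python
import random


def make_fel_weights(n_hidden, n_fel, fan_in, seed=0):
    """Build fixed, sparse FEL weights (one row per FEL neuron).

    Assumption: each FEL neuron connects to `fan_in` randomly chosen hidden
    neurons with weights of +1 or -1. The summary only says that each neuron
    sees a portion of the hidden layer and that the weights never change.
    """
    rng = random.Random(seed)
    weights = []
    for _ in range(n_fel):
        row = [0.0] * n_hidden
        for j in rng.sample(range(n_hidden), fan_in):
            row[j] = rng.choice((-1.0, 1.0))
        weights.append(row)
    return weights


def fel_forward(hidden, weights, n_agree, n_disagree):
    """Trigger a few FEL neurons and emit constant activations.

    Assumption: "agreement" is the weighted sum of the hidden signal; the
    n_agree highest-scoring neurons output +1, the n_disagree lowest-scoring
    neurons output -1, and all other neurons stay at 0.
    """
    n_fel = len(weights)
    if n_agree + n_disagree > n_fel:
        raise ValueError("cannot trigger more neurons than the layer has")
    scores = [sum(w * h for w, h in zip(row, hidden)) for row in weights]
    order = sorted(range(n_fel), key=lambda i: scores[i])  # ascending score
    out = [0.0] * n_fel
    for i in order[:n_disagree]:
        out[i] = -1.0  # constant value, not the computed score
    for i in order[n_fel - n_agree:]:
        out[i] = 1.0  # constant value, not the computed score
    return out


hidden = [0.9, 0.1, 0.4, 0.7, 0.2, 0.8, 0.3, 0.6]
fel_weights = make_fel_weights(n_hidden=8, n_fel=32, fan_in=3)
fel_out = fel_forward(hidden, fel_weights, n_agree=2, n_disagree=2)
```

In this sketch only 4 of the 32 FEL neurons end up nonzero for a given hidden signal, which illustrates the sparse expansion. How the error signal is routed past the layer during backpropagation is not modeled here.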

Comparison with Existing Approaches

The paper compares FEL against several established methods:

- Rehearsal Methods: Store and periodically retrain on previous patterns (high memory cost)

- Pseudorehearsal: Generate and retrain on pseudo-patterns (increased computation)

- Dual Methods: Maintain separate short-term and long-term memory representations (drastically increased complexity)

- Activation Sharpening: Reduce representational overlap through activation modification (50% computational increase)
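
To make the first two baselines concrete, the sketch below shows one possible shape for a rehearsal buffer and a pseudo-pattern generator. It is an illustration only: `RehearsalBuffer`, `pseudo_patterns`, and the `predict` callable are hypothetical names, reservoir sampling is a swapped-in choice for deciding which old patterns to keep, and the uniform input range is an assumption.

```python
import random


class RehearsalBuffer:
    """Keep a bounded sample of past patterns to replay alongside new ones."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, pattern):
        # Reservoir sampling: every pattern seen so far has an equal chance
        # of being in the buffer, whatever the stream length.
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(pattern)
        else:
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = pattern

    def mixed_batch(self, new_patterns, n_old):
        """Return the new patterns plus up to n_old stored ones."""
        old = self.rng.sample(self.items, min(n_old, len(self.items)))
        return list(new_patterns) + old


def pseudo_patterns(predict, n, dim, seed=0):
    """Pseudorehearsal: label random inputs with the current model's output.

    Assumption: inputs are drawn uniformly from [-1, 1]; `predict` stands in
    for whatever network is being protected from forgetting.
    """
    rng = random.Random(seed)
    inputs = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n)]
    return [(x, predict(x)) for x in inputs]


buffer = RehearsalBuffer(capacity=5)
for pattern_id in range(100):
    buffer.add(pattern_id)
batch = buffer.mixed_batch(new_patterns=[100, 101], n_old=3)
pseudo = pseudo_patterns(lambda x: sum(x) > 0, n=4, dim=3)
```

The buffer's memory cost grows with `capacity`, while pseudorehearsal stores nothing but pays for an extra forward pass per pseudo-pattern, which mirrors the trade-offs noted in the list above.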

Key Results

Experimental evaluation on a cluster classification task with non-stationary training inputs demonstrated:

- Superior Accuracy: FEL consistently achieved the highest classification accuracy across all levels of non-stationarity

- Linear Degradation: Unlike standard neural networks that show exponential accuracy decay, FEL exhibits roughly linear degradation as non-stationarity increases

- Significant Improvements: At 50% non-stationarity, FEL achieved 85% accuracy compared to 51% for standard NNs, 66% for pseudorehearsal, and 50% for activation sharpening
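
The accuracy figures at 50% non-stationarity can be restated as percentage-point gains and relative error reductions. The short calculation below uses only the four values quoted in this summary; the variable names are illustrative.

```python
# Accuracy (%) at 50% non-stationarity, as quoted in this summary.
accuracy = {
    "FEL": 85,
    "standard NN": 51,
    "pseudorehearsal": 66,
    "activation sharpening": 50,
}

fel_acc = accuracy["FEL"]
fel_err = 100 - fel_acc

# Percentage-point gain of FEL over each baseline.
gains = {
    name: fel_acc - acc for name, acc in accuracy.items() if name != "FEL"
}

# Fraction of each baseline's error that FEL removes.
error_reduction = {
    name: round(((100 - acc) - fel_err) / (100 - acc), 3)
    for name, acc in accuracy.items()
    if name != "FEL"
}
```

FEL's gain is 34 points over the standard network, 19 over pseudorehearsal, and 35 over activation sharpening. In relative terms it removes roughly 69%, 56%, and 70% of the respective baseline's classification error.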

Research Impact

This work laid the foundation for subsequent research on sparse coding approaches to catastrophic forgetting, including ensemble methods and feature-sign search optimizations. The low resource requirements make FEL particularly suitable for large-scale implementations.

Authors

- Robert Coop (University of Tennessee, Knoxville)

- Itamar Arel (University of Tennessee, Knoxville)

Citation: R. Coop and I. Arel, “Mitigation of Catastrophic Interference in Neural Networks using a Fixed Expansion Layer,” Proceedings of the IEEE 55th International Midwest Symposium on Circuits and Systems (MWSCAS), 2012.