Publication Overview

This paper, published in IEEE Transactions on Neural Networks and Learning Systems in 2013, addresses one of the fundamental challenges in neural network learning: catastrophic forgetting. When neural networks are trained on new patterns for an extended period, they tend to forget previously learned mappings, a phenomenon that severely limits their applicability to real-world scenarios involving nonstationary data streams.

The Problem: Catastrophic Forgetting

Catastrophic interference (another name for catastrophic forgetting) occurs when a neural network loses its ability to recall prior representations after being trained on new information. This is particularly problematic in applications such as:

- Financial time series analysis

- Climate prediction

- Any scenario with piecewise stationary or nonstationary data

Unlike biological systems that can retain long-term memories while learning new information, traditional neural networks suffer from drastic information loss when exposed to shifting input distributions.
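A deliberately extreme toy illustrates the mechanism. The setup is an assumption for illustration only, not one of the paper's experiments: a single linear model is fit to one input-to-target mapping (regime A) and then trained only on a different mapping (regime B). Because both regimes share the same weights, fitting B overwrites what was learned for A. The mappings `w_a` and `w_b` are hypothetical.

```python
import numpy as np


def train(w, X, y, lr=0.1, epochs=500):
    """Full-batch gradient descent on mean squared error for a linear model y ~ X w."""
    for _ in range(epochs):
        w = w - lr * 2.0 * X.T @ (X @ w - y) / len(y)
    return w


def mse(w, X, y):
    """Mean squared error of the linear model on (X, y)."""
    return float(np.mean((X @ w - y) ** 2))


rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 3))
w_a = np.array([1.0, -2.0, 0.5])   # hypothetical mapping for regime A
w_b = np.array([-1.0, 1.0, 2.0])   # hypothetical mapping for regime B
y_a, y_b = X @ w_a, X @ w_b

w = train(np.zeros(3), X, y_a)
err_a_before = mse(w, X, y_a)      # near zero: regime A has been learned
w = train(w, X, y_b)               # keep training, now only on regime B
err_a_after = mse(w, X, y_a)       # large: the shared weights now encode B instead
```

Real networks and real nonstationary streams are far less clean than this, but the root cause is the same. Gradient updates driven only by recent data are free to move weights that earlier mappings depended on.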

Our Solution: Fixed Expansion Layer Networks

We introduce the Fixed Expansion Layer (FEL) neural network architecture, which augments a traditional multilayer perceptron (MLP) with a sparsely encoded hidden layer specifically designed to retain prior learned mappings. Key innovations include:

-

Sparse Encoding: The FEL uses fixed weights that expand dense hidden layer signals into sparse representations, selectively gating weight update signal propagation through the network.

-

Feature-Sign Search Algorithm: We frame the FEL within the sparse coding domain and apply an efficient optimization algorithm that significantly improves accuracy while reducing the number of tunable parameters.

-

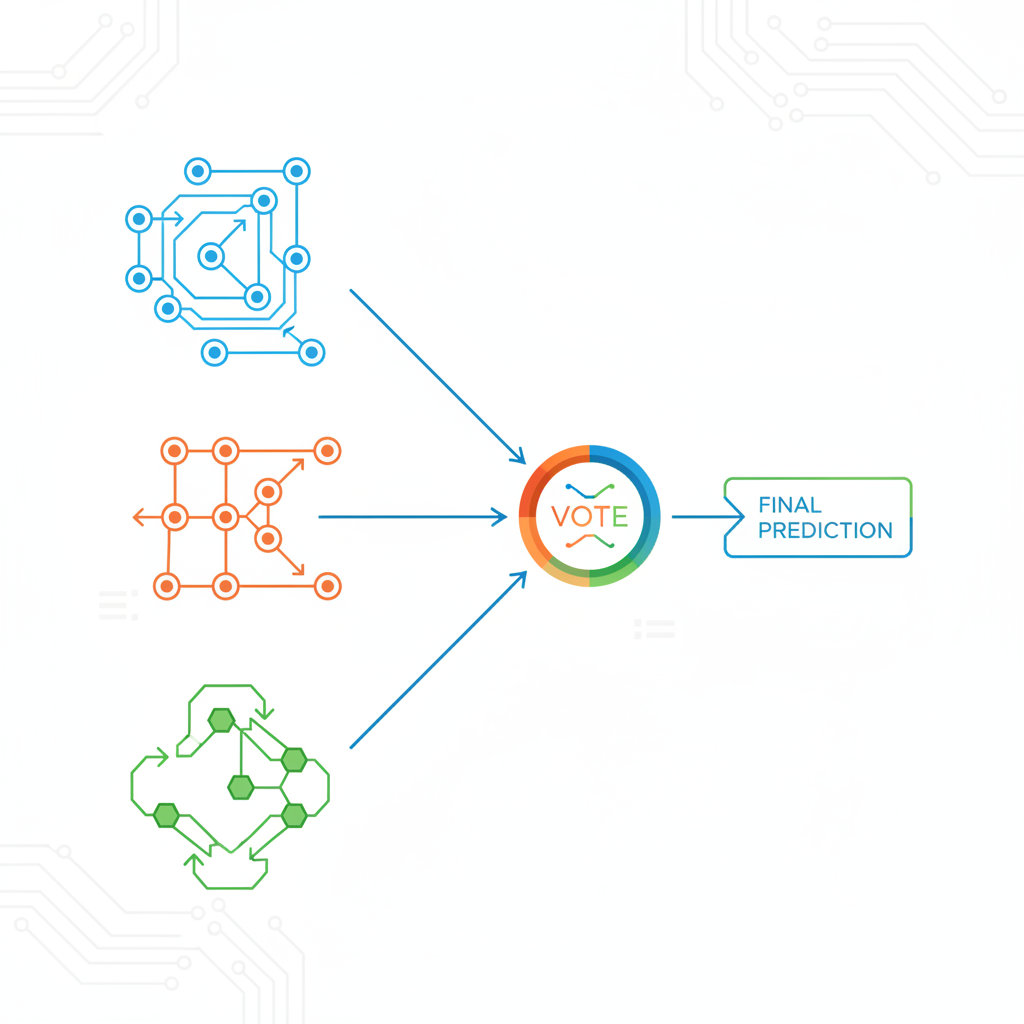

Ensemble Learning Framework: We propose training ensembles of FEL networks using the Jensen-Shannon Divergence as an information-theoretic diversity measure to further control undesired plasticity.

-

Confidence Estimation: Each learner in the ensemble estimates its own deviation from the target, allowing weighted aggregation that improves overall accuracy.
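The sparse-encoding idea can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the paper's construction. Each FEL unit is wired to a few random hidden units with fixed +1/-1 weights, and sparsity comes from keeping only the k most active FEL units (k-winners-take-all). Both choices are assumptions, and the function names are hypothetical. The point is the gating: during backpropagation, error reaches the hidden layer only through the active FEL units.

```python
import numpy as np


def make_fel_weights(n_hidden, n_fel, fan_in, rng):
    """Hypothetical fixed expansion weights: each FEL unit reads `fan_in` random hidden
    units with random +/-1 weights. These weights are never trained."""
    W = np.zeros((n_fel, n_hidden))
    for u in range(n_fel):
        cols = rng.choice(n_hidden, size=fan_in, replace=False)
        W[u, cols] = rng.choice([-1.0, 1.0], size=fan_in)
    return W


def fel_forward(h, W_fel, k):
    """Expand the dense hidden vector h, then keep only the k most active FEL units (assumed k-WTA)."""
    a = W_fel @ h
    mask = np.zeros(a.shape, dtype=bool)
    mask[np.argsort(a)[-k:]] = True
    return np.where(mask, a, 0.0), mask


def fel_backward(grad_z, W_fel, mask):
    """Gradient w.r.t. h collects contributions only from active FEL units; hidden units
    not wired to any active unit receive zero gradient from this pattern."""
    return W_fel.T @ (grad_z * mask)


rng = np.random.default_rng(0)
W_fel = make_fel_weights(n_hidden=16, n_fel=128, fan_in=4, rng=rng)
h = np.tanh(rng.standard_normal(16))               # stand-in for a dense hidden activation
z, mask = fel_forward(h, W_fel, k=8)               # 8 of 128 FEL units stay active
grad_h = fel_backward(np.ones(128), W_fel, mask)   # error gated by the active set
```

Because different input patterns tend to activate different small subsets of FEL units, updates driven by one pattern touch fewer of the pathways that other patterns rely on. This sketch is one way to read "selectively gating weight update signal propagation."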
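Feature-sign search, as published by Lee et al. for sparse coding, solves the L1-regularized least-squares problem min_x ||y - A x||^2 + gamma * ||x||_1. It works by guessing the sign of each active coefficient, solving the resulting unconstrained quadratic problem in closed form, and line-searching over sign changes. The sketch below follows that generic procedure. How FEL-FS chooses the dictionary `A`, the input `y`, and `gamma` is not reproduced here, and the function names are hypothetical.

```python
import numpy as np


def l1_objective(A, y, x, gamma):
    """Sparse-coding objective ||y - A x||^2 + gamma * ||x||_1."""
    r = y - A @ x
    return float(r @ r + gamma * np.abs(x).sum())


def feature_sign_search(A, y, gamma, tol=1e-9, max_steps=1000):
    """Sketch of feature-sign search for min_x ||y - A x||^2 + gamma * ||x||_1."""
    n = A.shape[1]
    AtA, Aty = A.T @ A, A.T @ y
    x = np.zeros(n)
    theta = np.zeros(n)                 # signs of the active coefficients
    active = np.zeros(n, dtype=bool)    # current active set

    for _ in range(max_steps):
        grad = 2.0 * (AtA @ x - Aty)    # gradient of the squared-error term
        idx = np.flatnonzero(active)
        nonzero_ok = idx.size == 0 or np.max(np.abs(grad[idx] + gamma * theta[idx])) <= tol
        if nonzero_ok:
            # Nonzero coefficients are optimal; try to activate a zero coefficient.
            zeros = np.flatnonzero(~active)
            if zeros.size == 0:
                break
            i = zeros[np.argmax(np.abs(grad[zeros]))]
            if abs(grad[i]) <= gamma:
                break                   # zero coefficients are optimal too: done
            active[i] = True
            theta[i] = -np.sign(grad[i])
            idx = np.flatnonzero(active)

        # Feature-sign step: closed-form solution of the QP with signs fixed to theta.
        x_old = x[idx]
        rhs = Aty[idx] - gamma * theta[idx] / 2.0
        x_new = np.linalg.lstsq(AtA[np.ix_(idx, idx)], rhs, rcond=None)[0]

        # Discrete line search over x_new and every sign change on the segment.
        candidates = [x_new]
        for j in range(idx.size):
            if x_old[j] != 0.0 and x_old[j] != x_new[j]:
                t = x_old[j] / (x_old[j] - x_new[j])
                if 0.0 < t < 1.0:
                    point = x_old + t * (x_new - x_old)
                    point[j] = 0.0      # snap the crossing coefficient to exactly zero
                    candidates.append(point)
        A_act = A[:, idx]
        x[idx] = min(candidates, key=lambda p: l1_objective(A_act, y, p, gamma))

        # Drop coefficients that reached zero and refresh the sign vector.
        active &= (x != 0.0)
        theta = np.sign(x)
    return x


# Usage: with an orthonormal dictionary the solution is y soft-thresholded at gamma / 2.
x_hat = feature_sign_search(np.eye(3), np.array([3.0, -0.2, -1.5]), gamma=1.0)
# x_hat is approximately [2.5, 0.0, -1.0]
```

Only `gamma` controls sparsity in this formulation. That fits the claim that framing the FEL as sparse coding reduces the number of tunable parameters, although the exact parameter savings depend on details not shown here.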

Key Results

Our experiments demonstrate significant improvements over existing approaches:

- Traditional Catastrophic Forgetting Tasks: FEL outperforms standard MLPs, pseudorehearsal, and activation sharpening methods

- Nonstationary Gaussian Classification: FEL maintains higher accuracy across varying levels of nonstationarity, and its accuracy degrades linearly (rather than exponentially) as nonstationarity increases

- MNIST Digit Classification: Both FEL and FEL-FS (with feature-sign search) significantly outperform standard MLPs under nonstationary conditions

- Ensemble Performance: Combining FEL networks with JSD-based diversity and error-weighted voting achieves the highest accuracy, particularly at high nonstationarity levels
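The two ensemble ingredients mentioned above can be illustrated with a minimal sketch. Diversity is measured with the equal-weight generalized Jensen-Shannon Divergence over the learners' output distributions, H(mean of P_i) - mean of H(P_i) in bits. Aggregation weights each learner by the inverse of its own predicted error. The inverse-error weighting and the function names are assumptions. How the paper folds JSD into training and how each learner produces its error estimate are not reproduced here.

```python
import numpy as np


def entropy_bits(p):
    """Shannon entropy in bits, treating 0 * log 0 as 0."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]
    return float(-(nz * np.log2(nz)).sum())


def ensemble_jsd(dists):
    """Equal-weight generalized JSD of the learners' distributions: 0 when all agree."""
    P = np.asarray(dists, dtype=float)
    return entropy_bits(P.mean(axis=0)) - float(np.mean([entropy_bits(p) for p in P]))


def confidence_weighted_vote(probs, predicted_errors, eps=1e-6):
    """Combine class distributions, weighting each learner by 1 / (its predicted error)."""
    probs = np.asarray(probs, dtype=float)
    w = 1.0 / (np.asarray(predicted_errors, dtype=float) + eps)
    w /= w.sum()
    return w @ probs    # convex combination of the learners' distributions


# Usage with three hypothetical learners over three classes.
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.6, 0.3, 0.1]]
errors = [0.1, 0.9, 0.2]   # each learner's own estimate of its deviation from the target
diversity = ensemble_jsd(probs)
combined = confidence_weighted_vote(probs, errors)
predicted_class = int(np.argmax(combined))
```

The second learner disagrees with the others and reports a large predicted error, so its vote is heavily discounted. This reflects the intuition behind error-weighted voting, under the assumed weighting rule.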

Research Impact

This work was supported by the Intelligence Advanced Research Projects Activity (IARPA) via the Army Research Office and by the National Science Foundation. The proposed framework applies directly to many real-world machine learning tasks, including time series prediction and anomaly detection.

Authors

- Robert Coop (University of Tennessee, Knoxville)

- Aaron Mishtal (University of Tennessee, Knoxville)

- Itamar Arel (University of Tennessee, Knoxville)

Citation: R. Coop, A. Mishtal, and I. Arel, “Ensemble Learning in Fixed Expansion Layer Networks for Mitigating Catastrophic Forgetting,” IEEE Transactions on Neural Networks and Learning Systems, 2013. DOI: 10.1109/TNNLS.2013.2264952